AI Companion

Concept:

Inspired by the classic non-playable companions that players see and interact with in many popular titles (Dragon’s Dogma, God of War, Bioshock Infinite, etc.), I approached this project wanting to replicate and understand the core mechanics behind what an AI companion does, and to implement a prototype of such a companion in Unreal Engine.

Core Mechanics:

In many of these games, depending on the genre, AI companions handle a wide range of mechanics, from combat to additional abilities to exploration. When creating this prototype, I needed to filter through this list and narrow down the exact features I wanted to implement. I decided to forgo any mechanic related to combat or abilities and focus entirely on exploration in a third-person world. The mechanics I settled on are as follows:

Follows the player as they run around

Explores the area in a radius around the player

Reacts to specific PoIs (Points of Interest) when the player moves towards these objects

Now that I have a focus on the core mechanics I want to implement for this companion, let’s dive into them step by step.

Following the Player:

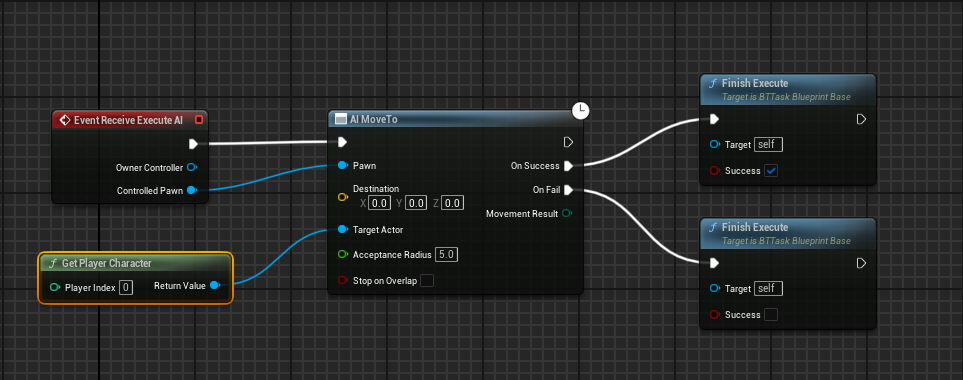

Having the companion simply follow the player character is relatively straightforward and is in fact where we’ll start our implementation. By creating a standard AI controller and behavior tree, we can define a behavior tree task that makes the AI move to the target actor’s location (in this case, our player) as shown below.

The most glaring problem with following the player like this is that it feels unnatural and clunky: the AI companion is practically glued to the back of the player and bumps into them whenever they stop or turn. To make it feel more organic, like an actual companion, we need the AI to predict the player’s position as they move and to move alongside them.

The simplest way to achieve this is through Request Pawn Prediction Event, with our player character as the predicted actor. Using AI Perception, we can then listen for this stimulus and retrieve the location where the player is predicted to be 2 seconds from now. We can then store this location as a vector in a blackboard key for our companion to move to in the behavior tree. A timer lets the companion check for an updated location every second.
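To make the idea of a predicted location concrete, here is a minimal, engine-agnostic sketch. It assumes the prediction is a simple linear extrapolation of the player's current velocity; `Vec3` and `PredictLocation` are hypothetical names for illustration, not Unreal types or functions.

```cpp
#include <cassert>

// Minimal stand-in for an engine vector type (hypothetical, not Unreal's FVector).
struct Vec3 {
    double x, y, z;
};

// Linearly extrapolate where an actor will be after `seconds`,
// assuming it keeps its current velocity for the whole interval.
Vec3 PredictLocation(const Vec3& position, const Vec3& velocity, double seconds) {
    assert(seconds >= 0.0);  // predicting into the past is not meaningful here
    return Vec3{position.x + velocity.x * seconds,
                position.y + velocity.y * seconds,
                position.z + velocity.z * seconds};
}
```

With the 2-second window described above, the companion's target would be `PredictLocation(playerPosition, playerVelocity, 2.0)`, re-evaluated each time the timer fires.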

Exploration and Pacing:

Predicting the player’s movement is a good first step in the right direction, but there’s a lot more we can do to simulate organic movement instead of having the companion simply beeline for the prediction point. By adding some pacing to the companion’s movement, along with an element of randomness to its endpoint, the companion would seem a lot more like its own entity rather than just another object following the player. It would also ensure that the companion doesn’t always end up directly in the path of the player.

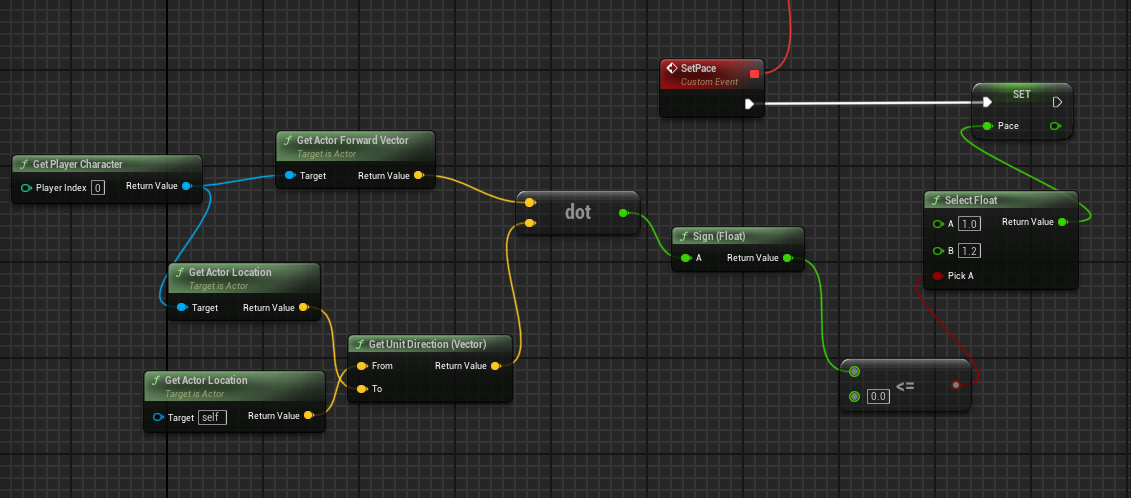

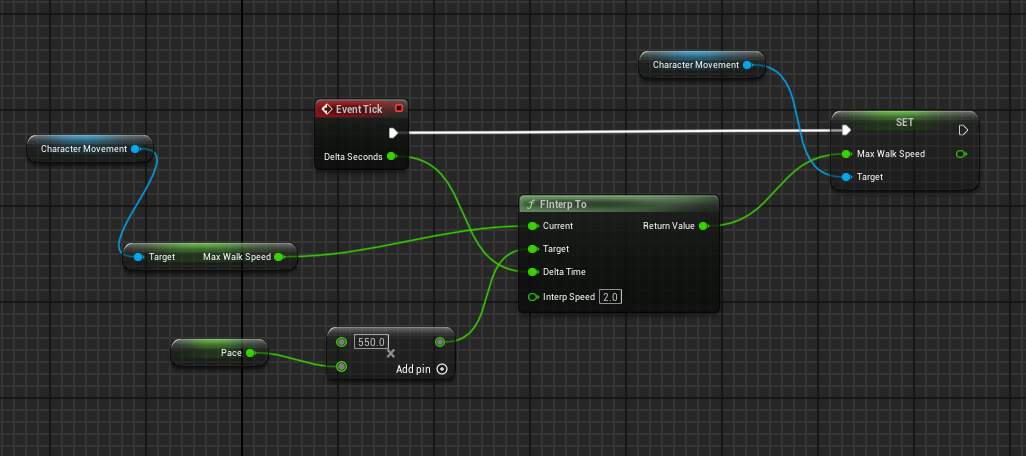

In terms of pacing, we can make sure the companion never reaches the prediction point and abruptly stops while the player is still moving. We do this by making the companion faster than the player when it is behind the player and slower than the player when it is in front.
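One way to sketch this pacing rule outside the engine is to project the companion's offset from the player onto the player's direction of travel: a negative projection means the companion is behind, a positive one means it is ahead. The function name and the 1.25 and 0.75 multipliers below are illustrative assumptions, not values from the project.

```cpp
#include <cassert>

// Minimal 2D vector for ground-plane math (hypothetical helper type).
struct Vec2 {
    double x, y;
};

// Pick a movement speed for the companion relative to the player's speed.
// Behind the player: speed up to catch up. Ahead of the player: slow down.
double ChooseCompanionSpeed(const Vec2& playerPos, const Vec2& playerVelocity,
                            const Vec2& companionPos, double playerSpeed) {
    assert(playerSpeed >= 0.0);
    const double kCatchUpScale = 1.25;   // assumed tuning value
    const double kSlowDownScale = 0.75;  // assumed tuning value

    // Offset from player to companion, projected onto the player's velocity.
    // Only the sign matters, so the velocity does not need normalizing.
    const double offsetX = companionPos.x - playerPos.x;
    const double offsetY = companionPos.y - playerPos.y;
    const double along = offsetX * playerVelocity.x + offsetY * playerVelocity.y;

    if (along < 0.0) return playerSpeed * kCatchUpScale;   // companion is behind
    if (along > 0.0) return playerSpeed * kSlowDownScale;  // companion is ahead
    return playerSpeed;  // level with the player, or the player is standing still
}
```

In Unreal, the returned value would typically be written to the companion's movement component; that wiring is left out here.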

We can also introduce randomness to the companion’s endpoint by defining a radius around the prediction point and having the companion choose a random target location within it. That way its movements are never exactly the same and come across as more organic.
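The random endpoint can be sketched as uniform sampling inside a disc around the prediction point. This is an engine-agnostic illustration with a hypothetical `RandomPointInRadius` helper; it ignores navigation, so in a real level the result would still need to be checked against the nav mesh.

```cpp
#include <cassert>
#include <cmath>
#include <random>

// Minimal 2D vector for ground-plane math (hypothetical helper type).
struct Vec2 {
    double x, y;
};

// Pick a uniformly distributed random point inside a disc around `center`.
// Taking the square root of the random fraction avoids clustering near the center.
Vec2 RandomPointInRadius(const Vec2& center, double radius, std::mt19937& rng) {
    assert(radius >= 0.0);
    std::uniform_real_distribution<double> unit(0.0, 1.0);
    const double kTwoPi = 6.283185307179586;
    const double angle = unit(rng) * kTwoPi;
    const double distance = radius * std::sqrt(unit(rng));
    return Vec2{center.x + distance * std::cos(angle),
                center.y + distance * std::sin(angle)};
}
```

Seeding the generator once (for example `std::mt19937 rng{std::random_device{}()};`) and reusing it for every call keeps successive targets varied.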

Points of Interest:

Now that we have the companion’s basic movement set up, I wanted to expand on the exploration aspect by having the companion search the space and bring the player’s attention to various points of interest. In an in-game setting, this could be used to progress story points or to highlight important interactables for the player to explore.

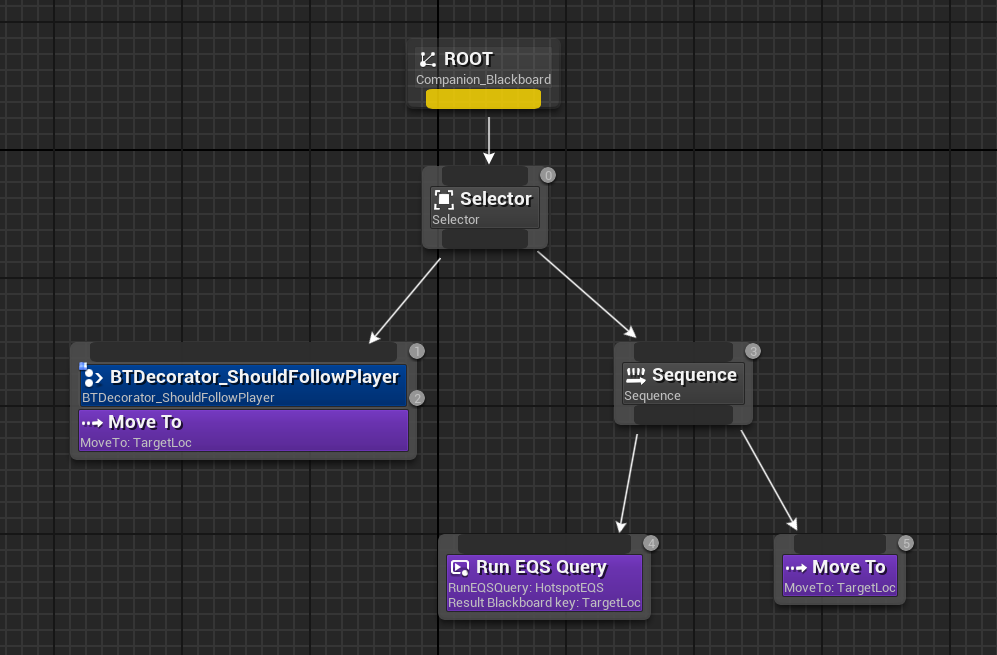

By setting up an EQS query in the AI’s behavior tree, we can have it look for points of interest by distance. A decorator then decides whether the AI companion should follow the player or move to the point of interest. For implementation purposes, the decorator checks two conditions: that the player is not moving, and that the player is no farther than a certain distance from the companion.
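The decorator's two conditions can be expressed as a small predicate. This is a sketch in plain C++ rather than an actual behavior tree decorator; the function name, the parameters, and the idle speed threshold are assumptions for illustration.

```cpp
#include <cassert>
#include <cmath>

// Minimal 2D vector for ground-plane math (hypothetical helper type).
struct Vec2 {
    double x, y;
};

// Returns true when the companion may leave the player to visit a point of
// interest: the player is (nearly) standing still and is still close by.
bool ShouldVisitPointOfInterest(const Vec2& playerPos, const Vec2& playerVelocity,
                                const Vec2& companionPos, double maxPlayerDistance,
                                double idleSpeedThreshold = 1.0) {
    assert(maxPlayerDistance >= 0.0);
    const double playerSpeed = std::hypot(playerVelocity.x, playerVelocity.y);
    const double distance = std::hypot(companionPos.x - playerPos.x,
                                       companionPos.y - playerPos.y);
    const bool playerIsIdle = playerSpeed <= idleSpeedThreshold;
    const bool playerIsNear = distance <= maxPlayerDistance;
    return playerIsIdle && playerIsNear;
}
```

When the predicate returns false, the behavior tree would fall back to the follow branch described earlier.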

On top of this, we can add gameplay tags to the points of interest to give them a priority system in the EQS query, instead of the companion simply moving to the closest one. We can even have the companion react differently to each point of interest by playing specific animation montages based on the gameplay tag associated with that point of interest, like below.
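As an engine-agnostic sketch of the priority idea (not the Blueprint setup used in the project), the selection can be modeled as: keep only the points within range, prefer the highest-priority tag, and break ties by distance. Plain strings stand in for gameplay tags here, and every name and tag value is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Minimal 2D vector for ground-plane math (hypothetical helper type).
struct Vec2 {
    double x, y;
};

struct PointOfInterest {
    std::string tag;  // stand-in for a gameplay tag, e.g. "POI.Story"
    Vec2 location;
};

// Returns the index of the best point of interest within `searchRadius`,
// or -1 if none qualifies. Higher priority wins; nearer wins on a tie.
// Tags missing from `tagPriority` are treated as priority 0.
int SelectPointOfInterest(const std::vector<PointOfInterest>& points,
                          const std::map<std::string, int>& tagPriority,
                          const Vec2& companionPos, double searchRadius) {
    assert(searchRadius >= 0.0);
    int bestIndex = -1;
    int bestPriority = 0;
    double bestDistance = 0.0;
    for (std::size_t i = 0; i < points.size(); ++i) {
        const double distance = std::hypot(points[i].location.x - companionPos.x,
                                           points[i].location.y - companionPos.y);
        if (distance > searchRadius) continue;  // out of range

        const auto it = tagPriority.find(points[i].tag);
        const int priority = (it != tagPriority.end()) ? it->second : 0;

        const bool better = bestIndex < 0 || priority > bestPriority ||
                            (priority == bestPriority && distance < bestDistance);
        if (better) {
            bestIndex = static_cast<int>(i);
            bestPriority = priority;
            bestDistance = distance;
        }
    }
    return bestIndex;
}
```

The tag of the selected point could then also be used as the key for choosing which reaction to play.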